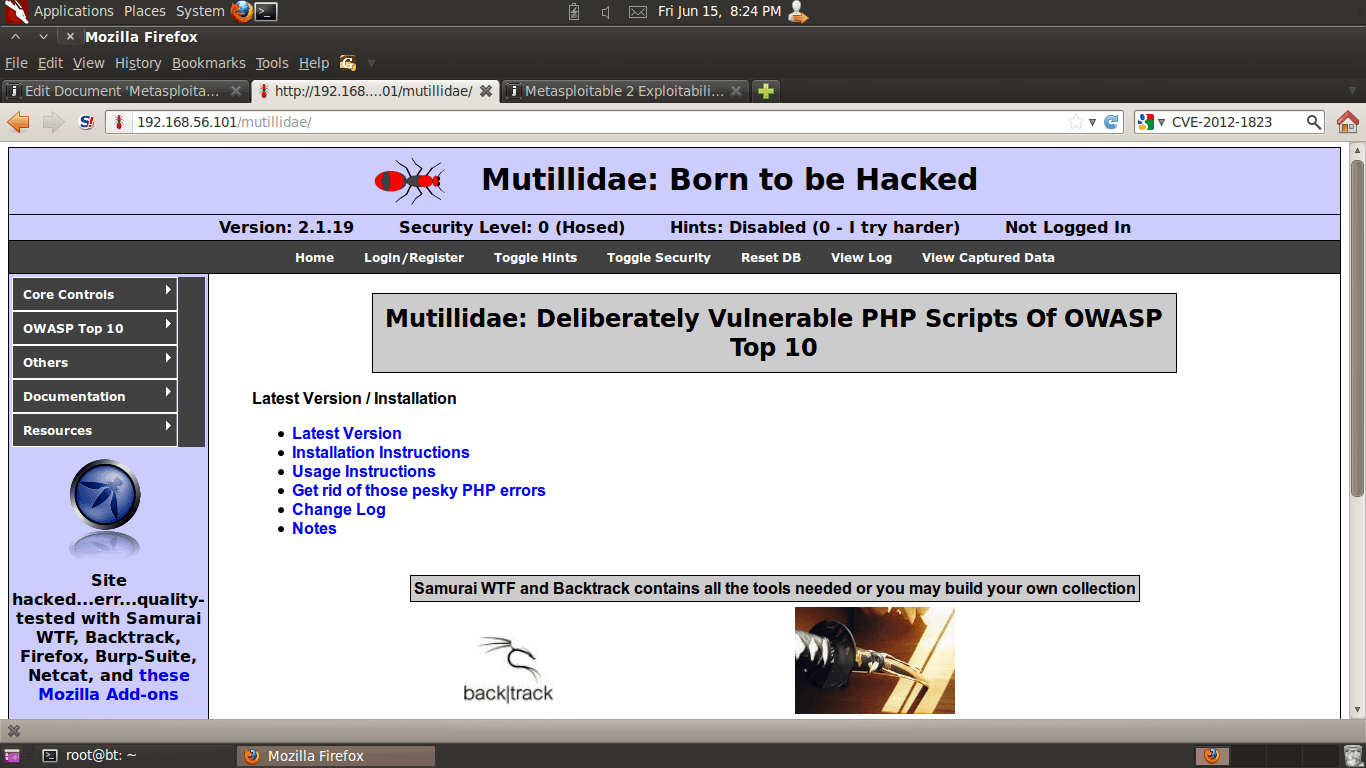

If you want to follow along and have not yet got DVWA set up, take a look at this tutorial on Setting up a Vulnerable LAMP Server. It will run you through setting up a vulnerable virtual machine and installing DVWA. I have used this as the basis for all my web hacking tutorials on Hemps Tutorials.

Burp Suite spider Metasploitable 2 login submit form how to

In this tutorial, I will show you how to beat the Low, Medium and High levels of the brute force challenge within DVWA (Damn Vulnerable Web App). Recursive retrieval should be used with care. Don’t say you were not warned.

Burp Suite spider Metasploitable 2 login submit form download

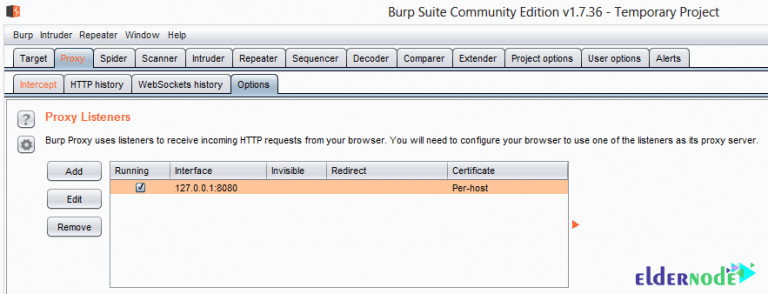

With the msfcrawler module loaded, set the target host and run the crawl:

msf auxiliary(msfcrawler) > set rhosts

msf auxiliary(msfcrawler) > exploit

You might want to check my metasploit posts.

5) httrack

httrack -O ~/Desktop/file

Httrack will mirror the site for you, by visiting and downloading every page that it can find.

6) burp suite

Burp Suite has a spider built in; you can right-click on a request and choose ‘send to spider’.

7) wget -r

wget -r

Wget can recursively download a site (similar to httrack).

GNU Wget is capable of traversing parts of the Web (or a single HTTP or FTP server), following links and directory structure. We refer to this as recursive retrieval, or recursion. The maximum depth to which the retrieval may descend is specified with the ‘-l’ option. The default maximum depth is five layers.

By default, Wget will create a local directory tree, corresponding to the one found on the remote server. Recursive retrieving can find a number of applications, the most important of which is mirroring. It is also useful for WWW presentations, and any other opportunities where slow network connections should be bypassed by storing the files locally.

You should be warned that recursive downloads can overload the remote servers. Because of that, many administrators frown upon them and may ban access from your site if they detect very fast downloads of big amounts of content. When downloading from Internet servers, consider using the ‘-w’ option to introduce a delay between accesses to the server. The download will take a while longer, but the server administrator will not be alarmed by your rudeness.
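To make the depth limit and the delay between requests more concrete, here is a minimal Python sketch of a recursive crawler that behaves roughly like ‘wget -r’ with ‘-l’ and ‘-w’. It is not how Wget itself is implemented; the names `crawl`, `max_depth`, `wait` and `fetch` are made up for this illustration, and it only follows plain `<a href>` links on the starting host.

```python
import time
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def http_fetch(url):
    """Default fetcher: return the page body as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, max_depth=5, wait=1.0, fetch=http_fetch):
    """Breadth-first crawl of one host; returns URLs in visit order.

    max_depth and wait are hypothetical parameters that play the role
    of wget's -l and -w options in this sketch.
    """
    host = urlsplit(start_url).netloc
    seen = {start_url}
    queue = deque([(start_url, 0)])
    visited = []
    while queue:
        url, depth = queue.popleft()
        if visited and wait > 0:
            time.sleep(wait)  # pause between requests to spare the server
        try:
            body = fetch(url)
        except OSError:
            continue  # unreachable page: skip it
        visited.append(url)
        if depth >= max_depth:
            continue  # depth limit reached: do not follow links further
        parser = LinkParser()
        parser.feed(body)
        for href in parser.links:
            link = urldefrag(urljoin(url, href)).url  # absolute, no #fragment
            if urlsplit(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited
```

Passing `fetch` as a parameter means you can try the function against a dictionary of fake pages before pointing it at a machine you are allowed to test, such as your own vulnerable VM.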

Burp Suite spider Metasploitable 2 login submit form software

4) Website crawler software in Kali Linux: metasploit

use auxiliary/crawler/msfcrawler

Grabber also generates a file for later statistical analysis.

Burp Suite spider Metasploitable 2 login submit form code

- Hybrid analysis/Crystal ball testing for PHP applications using PHP-SAT
- JavaScript source code analyzer: evaluation of the quality/correctness of the JavaScript with JavaScript Lint

Spidering a web application using website crawler software in Kali Linux

There are lots of tools to spider a web application (and companies which are based on this tech, e.g. Google). Here is a short list of tools to help you spider a site (e.g. for creating a sitemap for SEO, for recon on a pentest, or for logging to define your attack surfaces).

1) screaming frog

Screaming Frog is a well-known tool in the SEO industry. It has a pretty UI and will spider a site and find all the URLs (it also provides other information that may be of use, e.g. redirect codes, URL parameters, etc.).

2) Website crawler software in Kali Linux: skipfish

skipfish -YO -o ~/Desktop/folder

Skipfish is included in Kali Linux and will spider a site for you (and can also test for various vulnerable parameters and configurations). The -O flag tells skipfish not to submit any forms, and -Y tells skipfish not to fuzz directories.

3) grabber

grabber -spider 1 -sql -xss -url

Grabber can spider a site and test for SQLi and XSS. It’s a very basic tool, and is only recommended for small sites. Its checks include:

- SQL Injection (there is also a special Blind SQL Injection module)
- Simple AJAX check (parse every JavaScript and get the URL and try to get the parameters)
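Once a spider has given you a list of URLs, the parameters on those URLs are a first rough map of your attack surface. The following is a small Python sketch of that idea, not something any of the tools above ship with; the function name `attack_surface` and the example URLs are invented for illustration.

```python
from urllib.parse import parse_qsl, urlsplit


def attack_surface(urls):
    """Map each path to the sorted query parameter names seen on it."""
    surface = {}
    for url in urls:
        parts = urlsplit(url)
        names = surface.setdefault(parts.path or "/", set())
        # keep_blank_values=True so that "?q=" still counts as parameter q
        for name, _value in parse_qsl(parts.query, keep_blank_values=True):
            names.add(name)
    return {path: sorted(names) for path, names in surface.items()}


if __name__ == "__main__":
    # Hypothetical URLs, as a spider might report them.
    found = [
        "http://test.local/item.php?id=1&sort=asc",
        "http://test.local/item.php?id=2",
        "http://test.local/login.php",
    ]
    for path, params in attack_surface(found).items():
        print(path, params)
```

Pages that show up with no parameters, like the login page here, are usually form targets, so they are worth checking by hand as well.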
